Tackling discrimination in AI hiring tools: FINDHR presents new solutions

21 January 2026

Proven Risks of Discrimination in AI-Assisted Hiring

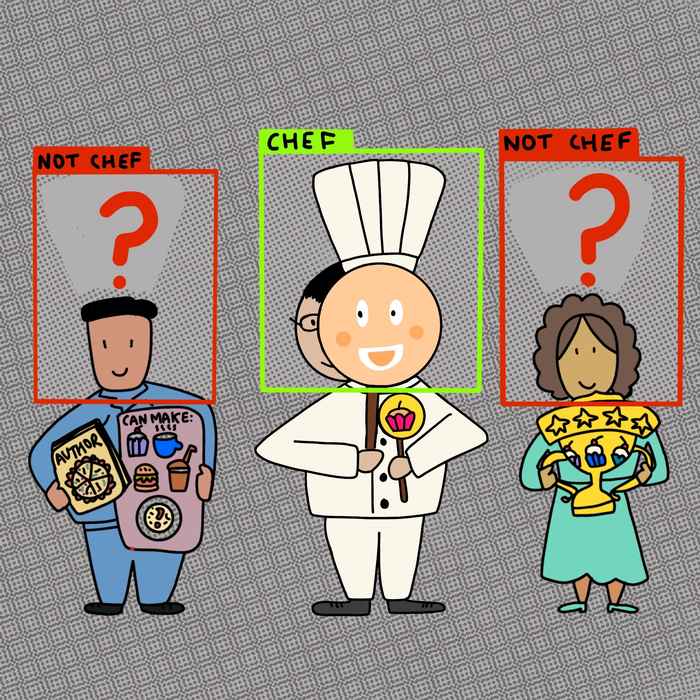

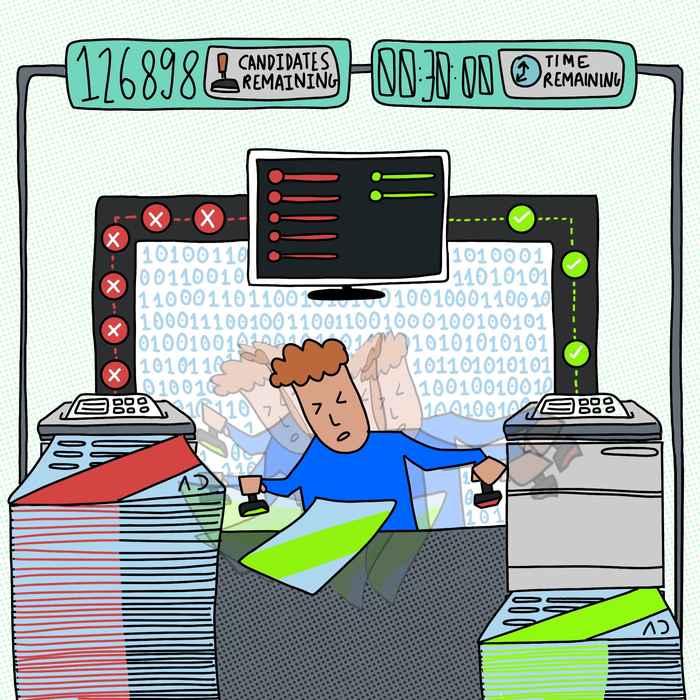

AI-assisted hiring systems promise time savings for HR professionals. However, real-world experiences show that these systems can reinforce existing patterns of discrimination—or create new ones—often without the awareness of those using them. The FINDHR project focuses especially on intersectional discrimination, where combinations of personal characteristics (such as gender, age, religion, origin, or sexual orientation) generate new or multiplied forms of discrimination.
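To see why intersectional discrimination can go unnoticed, consider a minimal sketch with invented numbers. It is not FINDHR data or a FINDHR method; the applicant records, the group labels and the helper `selection_rates` are all hypothetical. Shortlisting rates look balanced when gender or age is checked alone, yet differ sharply once the two characteristics are combined.

```python
from collections import defaultdict

# Hypothetical screening outcomes: (gender, age_band, shortlisted).
# All numbers are invented for illustration only.
applicants = (
    [("woman", "under_40", True)] * 8 + [("woman", "under_40", False)] * 2
    + [("woman", "over_40", True)] * 2 + [("woman", "over_40", False)] * 8
    + [("man", "under_40", True)] * 2 + [("man", "under_40", False)] * 8
    + [("man", "over_40", True)] * 8 + [("man", "over_40", False)] * 2
)


def selection_rates(records, key):
    """Return the share of shortlisted applicants per group defined by `key`."""
    shortlisted = defaultdict(int)
    total = defaultdict(int)
    for gender, age_band, selected in records:
        group = key(gender, age_band)
        total[group] += 1
        shortlisted[group] += int(selected)
    return {group: shortlisted[group] / total[group] for group in total}


# Single-attribute view: every group is shortlisted at the same rate.
by_gender = selection_rates(applicants, lambda g, a: g)
by_age = selection_rates(applicants, lambda g, a: a)

# Intersectional view: the combined groups are treated very differently.
by_both = selection_rates(applicants, lambda g, a: (g, a))

print(by_gender)
print(by_age)
print(by_both)
```

In this toy data, an audit that looks at gender and age separately would report no disparity, while women over 40 and men under 40 are shortlisted far less often than the other two groups.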

The research demonstrates that discrimination in automated hiring is not a theoretical concern but a lived reality for many. Interviews conducted with affected individuals in seven European countries (Albania, Bulgaria, Germany, Greece, Italy, the Netherlands, and Serbia) revealed feelings of powerlessness and frustration, with applicants often receiving only automated rejections outside working hours, despite strong qualifications and repeated applications.

Embedding fairness into AI hiring systems

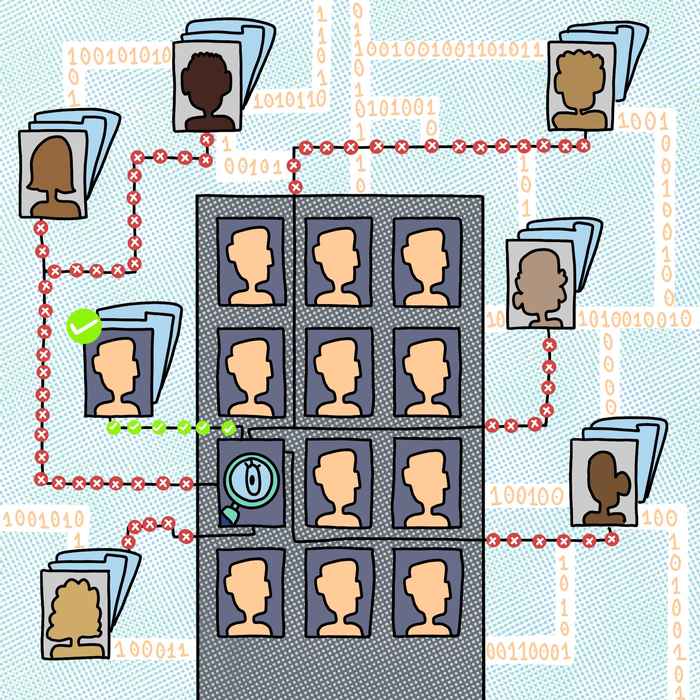

These experiences form the starting point for the project’s technical and practical work. At IRLab, researchers focused on embedding fairness and non-discrimination into the design of ranking and recommendation algorithms used in hiring.

"As part of the IRLab team, I assessed which fairness methods in algorithmic hiring systems are usable in recruitment. I focused particularly on candidates who belong to multiple minority groups when I developed fairness-aware and robust learning-to-rank methods. In addition, I contributed to the Software Development Guide, which explains how to design and implement human recommendation systems that comply with legal requirements and uphold fairness and transparency principles."

Clara Rus, PhD candidate at IRLab
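As a rough illustration of what fairness in a candidate ranking can mean, the sketch below measures how much attention each intersectional group receives in a ranked list. It is an assumption-laden example rather than the method developed at IRLab: the function `group_exposure`, the candidate IDs and the group labels are hypothetical, and the logarithmic position discount is a common convention from ranking evaluation, not something specified by the project.

```python
import math
from collections import defaultdict


def group_exposure(ranking):
    """Average position-based exposure per intersectional group.

    ranking: list of (candidate_id, group) tuples, best-ranked first.
    Exposure at 1-based rank r is 1 / log2(r + 1), so candidates lower
    in the list are assumed to receive less attention from recruiters.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for rank, (_, group) in enumerate(ranking, start=1):
        totals[group] += 1.0 / math.log2(rank + 1)
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}


# Hypothetical ranking; each group is a (gender, age_band) combination.
ranking = [
    ("c1", ("man", "under_40")),
    ("c2", ("woman", "under_40")),
    ("c3", ("man", "over_40")),
    ("c4", ("woman", "over_40")),
]

exposure = group_exposure(ranking)
for group, value in exposure.items():
    print(group, round(value, 3))
```

A fairness-aware ranking method would typically try to keep such exposure values comparable across groups with similar qualifications, including groups defined by several characteristics at once.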

FINDHR Tools and Solutions

Building on these findings and technical insights, the researchers compiled their results and recommendations into toolkits, guidelines and technical tools. The resources are freely available from FINDHR and are grouped by audience:

Job seekers

Policy makers

Product managers and Algorithmic auditors

Recruitment professionals

Software developers

About the FINDHR project

The FINDHR project represents a comprehensive, interdisciplinary approach to making AI hiring systems fairer, more accountable, and more transparent. For more information, please visit the FINDHR website.